How aMiSTACX helped fashion retailer DJK NOT to break the Internet.

If you haven’t heard of men’s and juniors’ fashion retailer DJK out of Ireland, you soon will. He is destined to be a household name, up there with the greats like Diesel and Hilfiger.

What sets him apart from everyone else is his story, and his story is one of heart, persistence, and the will to do better. Believe it or not, he started his retail business by selling just one used coat on eBay a few years ago! That’s right! He was bankrupt and in dire straits, and all he had at the time was just one used coat.

His story:

Fast-forward to 2018, and now he’s one of the fastest-growing fashion retailers online and offline in the UK! With that well-earned swagger comes his notorious reputation for crashing every hosting provider he has come in contact with during his special three-times-a-year “All Must Go!” sales events.

Well, that was until fate crossed his path with aMiSTACX. [One of the fastest growing software provisioning companies on Amazon AWS Marketplace.]

Our story starts with DJK’s technical VAR representative out of the UK – Mr. R. Mc Broom of ePrintingUK. In need of a Magento stack, he turned to aMiSTACX in early 2018, and worked with me personally on his first deployment. Right away he knew that aMiSTACX was way different from everyone else. He had his Magento 2.2.1 stack up and running in minutes, with all of the bells and whistles: a Cloudflare CDN, HTTPS, and AWS RDS.

Looking back now on how far aMiSTACX has come since early 2018, that Magento stack was top of the line at the time, but it lacked the scalability we already had in our comparable WordPress stacks.

Every question he asked, we had an answer, and it took just hours before he decided to migrate everything else to aMiSTACX. He even asked for help migrating his free Bitnami WordPress sites over, and of course we were very willing to help.

It was the WP migration to S3 and RDS that planted the seed for horizontal scalability in Magento. He was already working with DJK, and saw the potential of a framework that just might keep the breaker of the Internet at bay.

Making Good on a Bet – Post Black Friday 2017:

https://www.facebook.com/davidjameskerrr/videos/1463667677088246/

It’s not every day that a mom-n-pop retailer can pull numbers like Kim Kardashian and defend it all on a modest IT budget.

Right away Mc Broom asked, “Can you do the same with Magento?” I had to laugh, as we already had the Magento S3 plugin planned.

“Yes! We should have it available by late March,” I said. [Leaving plenty of time to test for the June 28th sales event.]

Well, things do not always go as planned, no matter how much you plan, and the same held true with our S3 development project. He was a little nervous when I told him it wouldn’t be delivered until late June, but he had amazing trust in us, and lacked confidence in Plan B – a free S3 plugin on GitHub. So he remained patient, knowing that somehow we’d come through.

Migration to the Latest & the Greatest

Meanwhile, we perfected our Magento stacks to the point that his early 2018 Magento stack was now obsolete. When we started to work together for the DJK sales event, one of the first things I told him was that the old stack had to go! He was hesitant at first, but trusted my experience and reputation, and gave me carte blanche to migrate everything over to the new stack.

And boy did we deliver! We migrated Magento 2.2.1 to our latest and greatest Magento 2.2.3 RDS stack, and it reduced his times from about 24 seconds on the old aMiSTACX stack to about 4-5 seconds. Then with proper tuning, and up against a really crappy theme, we were able to drop times down to 3-4 seconds with consistency. Mind you, this was now on a t2.medium vs. his old t2.large.

We were running short on time, as the sale was only about eight days away, so we had to hold off on upgrading to Magento 2.2.3 and fixing the theme’s bugs to shave off even more time, but that’s the real world. Even though I knew 2 seconds was possible, we had to settle for 3-4 seconds.

In a nail-biting, round-the-clock hackathon, we were able to complete the S3 plugin, now called the S3R-Alpha module, with just 2 days to spare.

Two days to install, sync, and load test a beta module is not much time. And of course these were not ideal conditions. Yep, we ran into problems: some erratic junk 3rd party Magento plugins, an unforeseen issue with the S3 module, and a few more gremlins.

Development Load Testing

With just about a day to spare, we were able to start load testing our stacks behind an AWS load balancer with AWS Auto Scaling enabled.

Here we were setting up a true 3-tier architecture for Magento 2, for the first time in many respects. The plan was to use Cloudflare up front to handle the CDN and DNS, aMiSTACX EC2 instances for the web tier, and AWS RDS for the backend. The aMiSTACX S3R-Alpha plugin moved all of the Magento media to S3, and a DNS CNAME was created to route all of the media requests through the Cloudflare CDN at the edge. This was the key to horizontal scaling, and allowed the use of AWS auto scaling to distribute the middle-tier web load.

Mind you, we are talking about Cloudflare, not to be confused with AWS CloudFront. aMiSTACX has used both, and can swear by the efficiency and ease of use of Cloudflare over AWS CloudFront. Even though aMiSTACX is practically a 100% AWS shop, Cloudflare wins.

The load generation script we selected simulated 1000 users per minute hitting the web servers. We started our auto scale group with five t2.large instances and a 65% threshold that signaled AWS Auto Scaling to spin up another EC2 instance and add it to the middle-tier web farm.
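To make the scale-out rule concrete, here is a minimal Python sketch of a threshold check like the 65% one described above. It is not the AWS Auto Scaling API and not our actual configuration: the function name `desired_capacity`, the choice of average CPU as the metric, the cap of 20 instances, and the sample utilization values are all assumptions for illustration.

```python
def desired_capacity(current: int, avg_utilization: float,
                     threshold: float = 65.0, max_size: int = 20) -> int:
    """Return the instance count after one scaling evaluation.

    Hypothetical helper: adds one instance when the group's average
    utilization (assumed here to be CPU %) exceeds the threshold,
    and never grows past max_size (an assumed cap).
    """
    if avg_utilization > threshold and current < max_size:
        return current + 1
    return current


# Start with five web servers, as in the dev load test.
size = 5
for load in (40.0, 70.0, 82.0, 60.0):  # made-up utilization samples
    size = desired_capacity(size, load)
print(size)
```

Raising `threshold` makes the group wait longer before adding a server, which trades some front-end headroom for fewer servers hitting the database at once.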

On the backend, we had a single RDS db.t2.2xlarge.

Our first tests, in dev, yielded results that were very reassuring. We scaled flawlessly to 10 servers, and maintained about a 10% load on the database.

We both knew the database was the weakest link, and I tried to push for an implementation using AWS Aurora, and to run a MySQL load test, but we just didn’t have the time. At this point, we had less than a day available to configure production with the new module, sync to S3, and get our auto scale tested and configured properly.

We completed the production cut-over in a few hours, but could not run real load testing on production [something I really wanted to do], both for lack of time and because the site had to be taken out of public view while the sale prices were changed.

A simple way to implement this circuit breaker was to create a page rule on Cloudflare that routed all traffic to a static HTML page hosted in an S3 bucket. All with one simple flick of a switch, and it worked perfectly for what we needed.

With eight hours to go, Mc Broom made the call to go with ten t2.large EC2 instances and a single RDS db.t2.2xlarge database server.

It was getting late for both of us, due to time differences, and I knew I would have to be awake at 3am EST to be on-call to assist in any emergency.

10 Minutes Prior to the GO GO GO sale!

Mc Broom pinged me on Wickr, and I had some last minute reservations and suggestions that I wanted to use to make sure bogus traffic stayed off of our webservers. I wanted to block or restrict countries where DJK had little or no sales. [A preemptive strike to reduce the spike.]

This way, bot traffic and tire-kickers would not be part of the opening flood. Additionally, I wanted to stop all crawlers from hitting the servers. Also, I wanted to bump the scale threshold to 77% as 65% was just way too low, and I feared too many servers would flood the database with cron activity. [Something Magento is known to do.]

But we had no time to do it all! Luckily Mc Broom had just enough time to bump the AS threshold to 77%. In hindsight that helped us a lot, and it was probably the single thing that prevented RDS from crashing in the first two minutes.

At 9am sharp, Mc Broom flipped the switch on Cloudflare, and now the flood gates were open. Picture the population of a small city hitting the web farm all at once. It’s that initial stampede that caused all prior hosting providers’ implementations to fail.

Embracing the Spike & Saying a Prayer

All was going well for the first minute or two, as all we noticed was some slight front-end lag while the extra capacity started to come online, but all eyes were now glued to the MySQL server. Sure enough resource utilization kept climbing as the DB server just couldn’t keep up. 70%, then 80%…then 90%…

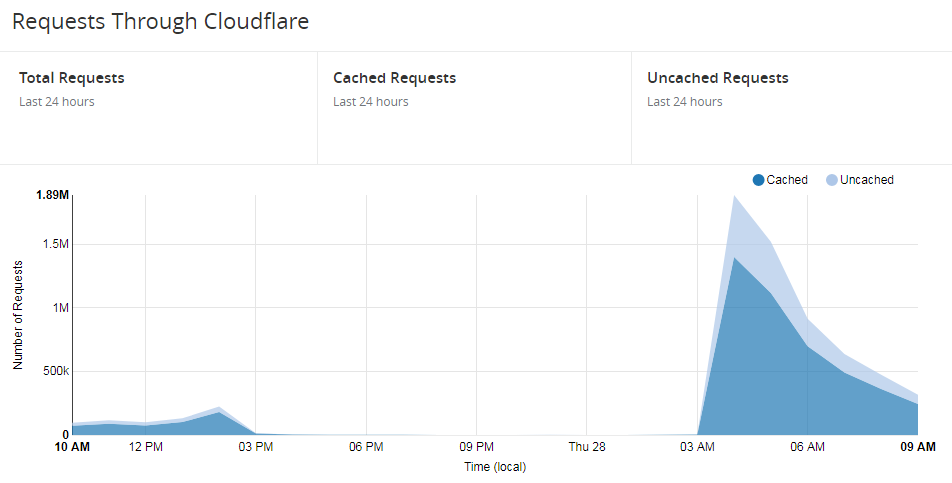

Notice the spike and the caching ratio 😉

And it kept climbing, and we were just a few minutes into the sale spike.

Mc Broom at this point was a little nervous, and we both feared the worst – if this kept up we would crash the MySQL server, and it would NOT be a good day. Even with the DB set up as Multi-AZ, it would take a lot of time to spin up a replacement. Also, a crash could mean data loss or data corruption.

Missing the Iceberg

Immediately, I sprang into action and directed Mc Broom to siphon off all of the unnecessary traffic, and just keep the essential UK-Euro shoppers. Cloudflare makes this a breeze with its Web Application Firewall feature. A quick 30 second tutorial with Mr. Mc Broom, and he was off. First China, then Russia, then India, all huge originators of junk traffic.
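The triage itself is simple enough to sketch in a few lines of Python. This only illustrates the logic, not Cloudflare’s rule syntax or API; `BLOCKED` holds the ISO 3166-1 alpha-2 codes for the three countries named above, while `allow_request` and the sample request list are hypothetical.

```python
# Country codes for China, Russia, and India (ISO 3166-1 alpha-2).
BLOCKED = frozenset({"CN", "RU", "IN"})


def allow_request(country_code: str, blocked: frozenset = BLOCKED) -> bool:
    """Return True if a request from this country should reach the web tier."""
    return country_code.upper() not in blocked


# Made-up sample of incoming request origins.
requests = ["GB", "IE", "CN", "FR", "RU", "IN", "GB"]
passed = [code for code in requests if allow_request(code)]
print(passed)
```

The point of doing this at the edge is that blocked requests never reach the EC2 web tier or the database at all.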

That started to work immediately! The MySQL server edged off of 98% and started to drop. Yet Mc Broom was concerned that no orders were coming through yet and asked for my advice. He wanted to scale up a level on the DB, but I told him that would take everything offline during the modification, and what happens if the next level crashes?

My only suggestion was to create a read replica. At the very least, if the main database failed, we could simply promote the replica to primary. Sort of an insurance policy. Also, I thought I might be able to find a way to make use of the read replica for read operations, but there was simply no time to even test such a solution.
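For readers who script their AWS work, the insurance policy above might look roughly like this in Python. It is a sketch, not what we ran that morning: the two method names match the boto3 RDS client, but the instance identifiers are invented, and `DryRunClient` is a stand-in that only records calls so the example runs without an AWS account.

```python
def create_replica(rds, source_id: str, replica_id: str) -> None:
    """Ask RDS to create a read replica of the source instance."""
    rds.create_db_instance_read_replica(
        DBInstanceIdentifier=replica_id,
        SourceDBInstanceIdentifier=source_id,
    )


def promote_replica(rds, replica_id: str) -> None:
    """Promote the replica to a standalone instance if the primary is lost."""
    rds.promote_read_replica(DBInstanceIdentifier=replica_id)


class DryRunClient:
    """Stand-in for boto3.client("rds") that only records the calls made."""

    def __init__(self):
        self.calls = []

    def create_db_instance_read_replica(self, **kwargs):
        self.calls.append(("create_db_instance_read_replica", kwargs))

    def promote_read_replica(self, **kwargs):
        self.calls.append(("promote_read_replica", kwargs))


rds = DryRunClient()  # swap in boto3.client("rds") to run against a real account
create_replica(rds, "djk-primary", "djk-replica")  # hypothetical identifiers
promote_replica(rds, "djk-replica")
for name, params in rds.calls:
    print(name, params)
```

Keep in mind that a promoted replica has its own endpoint, so the application would still need to be pointed at it before orders could flow again.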

Orders are Flowing

So he ran with it. In order to do this, he decided to restrict all incoming traffic for a few minutes while the replica spun up. We were probably only 7-8 minutes into the sale when we kicked this off. As this operation played out, we noticed the CPU start to drop on the database, and orders were now processing. A huge sigh of relief!

SALE in full flow… Fresh stock added https://t.co/8kWqbbmAvW pic.twitter.com/GTdsezy52e

— David James Kerr (@MrDavidJKerr) June 28, 2018

With the replica in place, the main flood gate was re-opened, but this time the network was able to handle the capacity. A glance at the middle-tier showed 15 servers! That’s 5 more that had spun up to handle the initial spike, and all were now primed to take just about anything up to 77%.

Whew… we made it, as we watched the RDS utilization continually drop. Speed on the frontend was decent – no crashes – and all improvement going forward every second – every minute. “Chipping away at it” as DJK likes to say.

For me it was back to bed, and for Mc Broom it was a day of running all over the place taking care of other fires.

Lessons Learned

In our post-sale discussion, we both knew that the DB going in was just way too small. It would have been best to go in with double the projected capacity to be on the safe side. [Wasn’t my call and not my dime.]

For the next big sale, Black Friday 2018, I told Mc Broom that DJK will be running state-of-the-art. He’ll probably have an efficient theme that runs in milliseconds, and we’ll make use of a clustered HA Aurora backend. Next time it will be Titanium.

Success!

This morning’s orders

Get on it…. In-store & online

Save upto 80% OFF #StoneIsland #CPCompany #CanadaGoose #Dsquared2 #PaulandShark https://t.co/8kWqbbmAvW pic.twitter.com/Whfx9Amlo7

– David James Kerr (@MrDavidJKerr) June 29, 2018

5-Star Support

As for my part and aMiSTACX, our only part in this Britannic sale was to deliver the S3R-Alpha Module, and advise on setting it all up. I’d have to say we delivered, and then some. My team spent more than a month on this project, and I spent dozens of hours assisting.

In return for all of our efforts, I’m extremely happy to have a little badge on DJK’s site that says “Powered by aMiSTACX”. As half my heritage is from Ireland, it’s a fitting tribute to the Fighting Irish.

Weaponize your Business with aMiSTACX!

~ Lead Robot

PS. Our S3R-Alpha / S3Sonic Modules are now available on all S3 Titanium stacks.